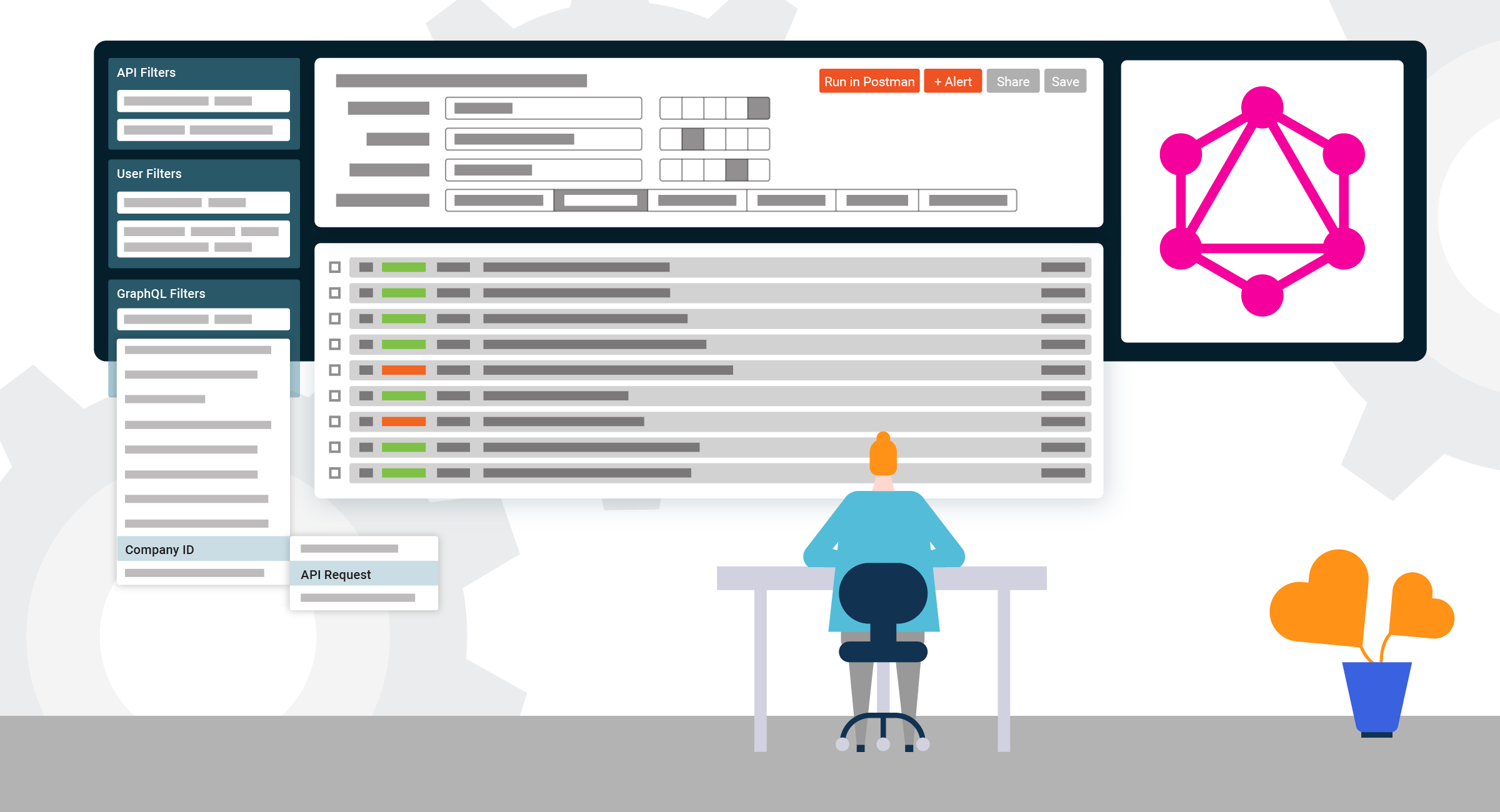

How to Best Monitor GraphQL APIs

Since its public release in 2015, GraphQL has become a popular alternative to REST. It gives frontend developers the flexibility they had craved for so long.

Gone are the days of begging backend developers for single-purpose endpoints. Now a single query can describe all the data that is needed and fetch it in one round trip, which can cut latency considerably, at least in theory.

With REST, some things were simpler, especially monitoring. The backend team could look at the metrics for each endpoint and see right away what was happening.

With GraphQL, this isn’t the case anymore. There is often only one endpoint, so per-endpoint measurements don’t help much. So where are the new places to hook into the system?

In this article, we look into monitoring GraphQL!

GraphQL Architecture

To get an idea of where the interesting points are in our system, let’s look into the potential architectures.

A simple GraphQL system consists mainly of three parts:

- A schema that defines all the data-types

- A GraphQL engine that uses the schema to route every part of a query to a resolver

- One or more resolvers, which are the functions that get called by the GraphQL engine

The GraphQL backend starts by parsing the schema, which tells the server which resolver handles which field of each type.

When we send a query to the GraphQL endpoint, the engine parses it and, for every field requested in the query, calls the matching resolver to satisfy the request.
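To make the three parts concrete, here is a deliberately tiny Python sketch of what an engine does after parsing. It walks the selected fields and calls the resolver registered for each `Type.field`, falling back to reading the field from the parent object. Everything here is hypothetical: the `FIELD_TYPES` map standing in for the schema, the `RESOLVERS` map, `execute`, and the dict-shaped query. A real engine such as graphql-js or graphql-core also parses and validates the query text.

```python
from typing import Any, Callable, Dict

# Hypothetical resolver signature: (parent object, field name) -> value.
Resolver = Callable[[Any, str], Any]

# Stand-in for the schema: the type each field returns, so the engine knows
# which type to continue with when it descends into a sub-selection.
FIELD_TYPES: Dict[str, str] = {
    "Query.user": "User",
    "User.name": "String",
    "User.address": "Address",
    "Address.city": "String",
}


def default_resolver(parent: Any, field: str) -> Any:
    # Like most engines, fall back to reading the field from the parent object.
    return parent[field]


RESOLVERS: Dict[str, Resolver] = {
    "Query.user": lambda parent, field: {"id": 1, "name": "Ada", "address_id": 7},
    "User.address": lambda parent, field: {"id": parent["address_id"], "city": "London"},
}


def execute(type_name: str, parent: Any, selection: Dict[str, Any]) -> Dict[str, Any]:
    """Resolve every selected field of `type_name`, recursing into sub-selections."""
    result: Dict[str, Any] = {}
    for field, sub_selection in selection.items():
        key = f"{type_name}.{field}"
        resolver = RESOLVERS.get(key, default_resolver)
        value = resolver(parent, field)
        if sub_selection:  # object type: keep walking with the child's type
            result[field] = execute(FIELD_TYPES[key], value, sub_selection)
        else:  # scalar field: use the value as-is
            result[field] = value
    return result


# Equivalent of: { user { name address { city } } }
query = {"user": {"name": None, "address": {"city": None}}}
print(execute("Query", None, query))  # {'user': {'name': 'Ada', 'address': {'city': 'London'}}}
```

Note that `User.address` has its own resolver. In this naive setup it would typically go back to the data-source, even when the address lives in the same record as the user.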

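As we can imagine, this approach only performs well with simple queries. Every resolver fetches its own data, so larger queries can lead to many separate, and sometimes redundant, requests to the data-source.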

Sometimes parts of the query are connected in our data-source (a data-source being something like a database or a third-party API). For example, say we load a user account and its address. They could be two types in the GraphQL schema but only one record in the data-source. If we request both in one go, we wouldn’t expect the server to make two requests to the data-source.

To get rid of this problem, people started to use a pattern called data-loader.

A data-loader is another layer in our GraphQL API that resides between our resolvers and our data-source.

In the simple setup, the resolvers access the data-source directly. In the more complex setup, the resolvers tell a data-loader what they need, and the data-loader accesses the data-source for them.

Why does this help?

The data-loader can wait until the resolvers that run together have all told it what they need, and then consolidate their access to the data-source.

Did someone want to load the user account and the address? This is only one request to the data-source now!

The idea is that a resolver only knows about its own requirements, while the data-loader knows what all resolvers want and can optimize the access.
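Here is a minimal asyncio sketch of the pattern. It assumes a data-source that can return several records in one round trip. The `DataLoader` class, `fetch_accounts`, and the record layout are hypothetical. The batch-function contract (a list of keys in, a list of values in the same order out) is loosely modeled on the JavaScript `dataloader` package, but this is not that package's API.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Generic, Hashable, List, Set, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class DataLoader(Generic[K, V]):
    """Minimal batching loader: keys requested in the same event-loop tick share one fetch."""

    def __init__(self, batch_fn: Callable[[List[K]], Awaitable[List[V]]]) -> None:
        self.batch_fn = batch_fn
        self.pending: Dict[K, "asyncio.Future[V]"] = {}
        self.scheduled = False
        self._tasks: Set["asyncio.Task[None]"] = set()  # keep dispatch tasks alive

    def load(self, key: K) -> "asyncio.Future[V]":
        loop = asyncio.get_running_loop()
        # Deduplicate: resolvers asking for the same key share one future.
        if key not in self.pending:
            self.pending[key] = loop.create_future()
        if not self.scheduled:
            # The dispatch task only starts after the callbacks already queued
            # (e.g. sibling resolvers) have run and registered their keys.
            self.scheduled = True
            task = loop.create_task(self._dispatch())
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)
        return self.pending[key]

    async def _dispatch(self) -> None:
        batch, self.pending, self.scheduled = self.pending, {}, False
        keys = list(batch)
        try:
            values = await self.batch_fn(keys)  # one round trip for the whole batch
            if len(values) != len(keys):
                raise ValueError("batch function must return one value per key")
            for key, value in zip(keys, values):
                batch[key].set_result(value)
        except Exception as exc:
            for future in batch.values():
                if not future.done():
                    future.set_exception(exc)


calls: List[List[int]] = []  # every round trip to the (hypothetical) data-source


async def fetch_accounts(ids: List[int]) -> List[dict]:
    # One record holds both the user fields and the address fields.
    calls.append(ids)
    return [{"id": i, "name": f"user{i}", "city": "Berlin"} for i in ids]


async def main() -> None:
    loader: DataLoader[int, dict] = DataLoader(fetch_accounts)

    async def resolve_user(user_id: int) -> str:
        return (await loader.load(user_id))["name"]

    async def resolve_address(user_id: int) -> str:
        return (await loader.load(user_id))["city"]

    # Two resolvers, same query, same underlying record.
    print(await asyncio.gather(resolve_user(1), resolve_address(1)))  # ['user1', 'Berlin']
    print(calls)  # [[1]]


asyncio.run(main())
```

Without the loader, each resolver would call `fetch_accounts` itself, and `calls` would record two round trips for the same record.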

Monitoring GraphQL

As we can see, depending on our architecture, there can be multiple places in which we can monitor our GraphQL API.

- HTTP endpoint: for all the traffic that hits our API

- GraphQL query: for each specific query

- GraphQL resolver or data-loader: for each access to the data-source

- Tracing: following each query to the resolvers and data-loaders it affects

1. HTTP Endpoint

The HTTP endpoint is what we monitored for a REST API. In the GraphQL world, there is often only one, so monitoring on this level only gives us information about the overall status of our API.

This isn’t bad; at least it gives us a starting point. If everything looks right here (low latency, low error rates, no customer complaints, all green), we can save time and money by looking only at these metrics.

If something is off, we need to dig deeper.
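Here is a minimal sketch of endpoint-level monitoring. It assumes a framework-agnostic handler that takes the parsed JSON body and returns a status code and a JSON body. The `Handler` type, `instrument`, `EndpointMetrics`, and `fake_graphql_handler` are all hypothetical. The sketch records the classic REST-style signals, latency and error rate, for the single GraphQL endpoint. One GraphQL-specific detail is that servers often report errors in an `errors` array of an HTTP 200 response, so the sketch counts those as failures too.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical handler shape: parsed JSON body in, (HTTP status, JSON body) out.
Handler = Callable[[Dict[str, Any]], Tuple[int, Dict[str, Any]]]


@dataclass
class EndpointMetrics:
    latencies_ms: List[float] = field(default_factory=list)
    requests: int = 0
    failures: int = 0

    def error_rate(self) -> float:
        return self.failures / self.requests if self.requests else 0.0

    def p95_ms(self) -> float:
        # Nearest-rank 95th percentile over all recorded requests.
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        return ordered[math.ceil(0.95 * len(ordered)) - 1]


def instrument(handler: Handler, metrics: EndpointMetrics) -> Handler:
    def wrapped(body: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
        start = time.perf_counter()
        failed = True  # stays True if the handler raises
        try:
            status, response = handler(body)
            # HTTP 200 with an "errors" array still counts as a failed request.
            failed = status >= 500 or bool(response.get("errors"))
            return status, response
        finally:
            metrics.latencies_ms.append((time.perf_counter() - start) * 1000)
            metrics.requests += 1
            metrics.failures += int(failed)

    return wrapped


def fake_graphql_handler(body: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    # Stand-in for the real server behind the single GraphQL endpoint.
    if "broken" in body["query"]:
        return 200, {"data": None, "errors": [{"message": "boom"}]}
    return 200, {"data": {"ok": True}}


metrics = EndpointMetrics()
endpoint = instrument(fake_graphql_handler, metrics)
for query in ["{ ok }", "{ ok }", "{ broken }", "{ ok }"]:
    endpoint({"query": query})
print(metrics.requests, metrics.error_rate())  # 4 0.25
```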

2. GraphQL Query

The next obvious step would be to look at each query, which can be good enough for APIs that have rather static usage patterns.

If only our own clients use the API, the queries usually won’t change much. But if the API is available to different customers with different requirements, things aren’t that simple anymore.

Suddenly we can have hundreds of (slightly) different queries that all run slow for some reason or another.
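One way to see this in practice is to key metrics by query shape instead of by endpoint. The following is a hypothetical sketch: `query_key`, `run_and_record`, and `fake_execute` are made up. It combines the operation name with a short SHA-256 hash of the whitespace-normalized query text.

```python
import hashlib
import re
import time
from collections import defaultdict
from typing import Any, Callable, Dict, List, Optional


def query_key(query: str, operation_name: Optional[str]) -> str:
    # Collapse whitespace so pure formatting differences map to the same key.
    normalized = re.sub(r"\s+", " ", query).strip()
    digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]
    return f"{operation_name or 'anonymous'}:{digest}"


# Latency samples (in seconds) per distinct query shape.
timings: Dict[str, List[float]] = defaultdict(list)


def run_and_record(
    execute: Callable[[str], Any], query: str, operation_name: Optional[str] = None
) -> Any:
    start = time.perf_counter()
    try:
        return execute(query)
    finally:
        timings[query_key(query, operation_name)].append(time.perf_counter() - start)


def fake_execute(query: str) -> Dict[str, Any]:
    # Stand-in for the GraphQL engine.
    return {"data": {}}


run_and_record(fake_execute, "query GetUser { user { name } }", "GetUser")
run_and_record(fake_execute, "query GetUser {\n  user { name }\n}", "GetUser")
run_and_record(fake_execute, "query GetUser { user { name address { city } } }", "GetUser")

for key, samples in timings.items():
    print(key, len(samples))
```

The first two requests differ only in whitespace and share a key. The third adds a single field and gets its own key, which hints at how "slightly different" queries multiply. Whitespace collapsing is naive, because comments or reordered arguments still produce new keys. Normalizing the parsed query document would be more robust.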

One way to mitigate this issue is to identify the most common queries and monitor them synthetically. This means we define a set of query and variable combinations and run them from test clients that check how long they take whenever we roll out a new version. This way, we reduce the risk of shipping significant performance regressions with an update. Persisted queries can help with this. Clients send an identifier (often a hash) of a query the server already knows instead of the full query text, which makes the queries in use easier to enumerate and monitor.
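Here is a rough sketch of such a synthetic check. It assumes a `send` function that posts a query with its variables and returns the decoded JSON response. `SyntheticCheck`, `run_checks`, the latency budgets, and `fake_send` are hypothetical. In a deployment pipeline, a non-empty result could be used to block the rollout.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical client: sends one GraphQL request to the deployment under test.
Send = Callable[[str, Dict[str, Any]], Dict[str, Any]]


@dataclass
class SyntheticCheck:
    name: str
    query: str
    variables: Dict[str, Any]
    budget_ms: float  # assumed latency budget for this query


def run_checks(send: Send, checks: List[SyntheticCheck], runs: int = 5) -> List[str]:
    """Return one problem description per check that errors or exceeds its budget."""
    problems: List[str] = []
    for check in checks:
        durations_ms: List[float] = []
        for _ in range(runs):
            start = time.perf_counter()
            response = send(check.query, check.variables)
            durations_ms.append((time.perf_counter() - start) * 1000)
            if response.get("errors"):
                problems.append(f"{check.name}: returned errors")
                break
        else:  # only reached when no run returned errors
            median = statistics.median(durations_ms)
            if median > check.budget_ms:
                problems.append(f"{check.name}: median {median:.1f} ms > {check.budget_ms} ms budget")
    return problems


def fake_send(query: str, variables: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for an HTTP client; simulates a regression in one field.
    if "slowField" in query:
        time.sleep(0.02)
    return {"data": {}}


checks = [
    SyntheticCheck("user-by-id", "query($id: ID!) { user(id: $id) { name } }", {"id": "1"}, budget_ms=50),
    SyntheticCheck("slow-report", "{ report { slowField } }", {}, budget_ms=5),
]
for problem in run_checks(fake_send, checks, runs=3):
    print(problem)
```

Using the median of a few runs rather than a single measurement makes the check less sensitive to one-off hiccups on the test client.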

If things get out of hand, we need to take another step.

3. Resolvers & Data-Loaders

The best place to monitor what’s happening is often where the rubber meets the road. If we look at the places in our backend that access the data-source, we can get a better grip on reality.

Is the type of data-source we use simply wrong for our access patterns? Do we need a different type of database?

Is the data-source type fine, but our requests to it need improving? Do we need something like a data-loader if we don’t already use one?

Do we send requests to external APIs that are too slow? Can we replicate that data closer to our backend?

All these questions can be answered once we see what data our backend retrieves and how it retrieves it.
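As a starting point, we could wrap each resolver in a timing decorator. This is a hypothetical sketch: `timed`, `resolver_timings`, and the example resolvers are made up, though the `(parent, info, **args)` call shape mirrors how graphql-core invokes resolvers. The decorator handles both plain and `async` resolvers, since resolvers that touch a data-source are often asynchronous.

```python
import asyncio
import functools
import inspect
import time
from collections import defaultdict
from typing import Any, Callable, Dict, List

# Durations in seconds, keyed by a label such as "User.address".
resolver_timings: Dict[str, List[float]] = defaultdict(list)


def timed(path: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that records every call's duration under `path`."""

    def decorate(fn: Callable[..., Any]) -> Callable[..., Any]:
        if inspect.iscoroutinefunction(fn):

            @functools.wraps(fn)
            async def async_wrapper(*args: Any, **kwargs: Any) -> Any:
                start = time.perf_counter()
                try:
                    return await fn(*args, **kwargs)
                finally:
                    resolver_timings[path].append(time.perf_counter() - start)

            return async_wrapper

        @functools.wraps(fn)
        def sync_wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                resolver_timings[path].append(time.perf_counter() - start)

        return sync_wrapper

    return decorate


@timed("Query.user")
def resolve_user(parent: Any, info: Any, user_id: str) -> Dict[str, str]:
    return {"id": user_id, "name": "Ada"}


@timed("User.address")
async def resolve_address(parent: Dict[str, str], info: Any) -> Dict[str, str]:
    await asyncio.sleep(0.01)  # pretend this calls a slow external API
    return {"city": "London"}


user = resolve_user(None, None, user_id="1")
asyncio.run(resolve_address(user, None))
for path, samples in resolver_timings.items():
    print(f"{path}: {len(samples)} call(s), slowest {max(samples) * 1000:.1f} ms")
```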

Here we also see another benefit of a data-loader. Monitoring a resolver only shows us what that one resolver does. Monitoring the data-loader shows us what all resolvers do within one request, and it also lets us solve inter-resolver problems once we have discovered them.
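Because the data-loader sees every key requested while a query runs, its batch function is a convenient single point to instrument. The sketch below is hypothetical (`instrument_batch`, `BatchRecord`, and `fetch_accounts` are made up). It records the batch size, the number of distinct keys, and the duration of each round trip. A steady stream of size-1 batches may mean batching isn't taking effect. A `keys` count larger than `unique_keys` points at duplicated requests.

```python
import asyncio
import time
from dataclasses import dataclass
from typing import Awaitable, Callable, Hashable, List, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


@dataclass
class BatchRecord:
    source: str       # which data-source the batch went to
    keys: int         # keys requested in this batch
    unique_keys: int  # distinct keys; fewer than `keys` means duplicated work
    seconds: float    # duration of the round trip


batch_log: List[BatchRecord] = []


def instrument_batch(
    source: str, batch_fn: Callable[[List[K]], Awaitable[List[V]]]
) -> Callable[[List[K]], Awaitable[List[V]]]:
    """Wrap a data-loader batch function so every batch is recorded."""

    async def wrapped(keys: List[K]) -> List[V]:
        start = time.perf_counter()
        try:
            return await batch_fn(keys)
        finally:
            batch_log.append(
                BatchRecord(source, len(keys), len(set(keys)), time.perf_counter() - start)
            )

    return wrapped


async def fetch_accounts(ids: List[int]) -> List[dict]:
    await asyncio.sleep(0.005)  # pretend database round trip
    return [{"id": i} for i in ids]


accounts = instrument_batch("accounts-db", fetch_accounts)


async def one_request() -> None:
    # Batches a loader might hand over while resolving one query.
    await accounts([1, 2, 1])
    await accounts([3])


asyncio.run(one_request())
for record in batch_log:
    print(record)
```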

4. Tracing The Whole Stack

This is the supreme discipline of monitoring. We tag a query with a tracing-ID when it comes in and pass this ID along as the query is translated into resolver calls and, in turn, data-loader calls, maybe even down to the data-source. We can then include the tracing-ID when logging timings and errors, so we can consolidate them later and get a look at the bigger picture.

The idea here is the following:

While measuring a query might tell us how long it took to resolve, the actual data loading happens further down the line in the resolvers and/or data-loaders, not while parsing the query.

We don’t work with queries anymore when loading the data since one of the core ideas of GraphQL is decoupling the queries from the actual data loading, but it is still lovely to see what happened in the background when someone sends a query.
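Here is a minimal sketch of tracing-ID propagation using Python's standard `contextvars` and `logging` modules. `handle_query`, `resolve_user`, `load_user`, and `CollectingHandler` are hypothetical stand-ins for the server entry point, a resolver, a data-loader, and a log backend. In production, a tracing library such as OpenTelemetry would more likely do this job, including passing the trace context on to downstream services.

```python
import asyncio
import contextvars
import logging
import time
import uuid
from collections import defaultdict
from typing import Dict, List

# Trace ID of the query currently being handled. asyncio tasks copy the context
# they are created in, so resolvers started for a query inherit its ID.
trace_id_var = contextvars.ContextVar("trace_id", default="-")


class TraceIdFilter(logging.Filter):
    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = trace_id_var.get()  # stamp every log line with the ID
        return True


class CollectingHandler(logging.Handler):
    """Stand-in for a log backend that lets us group lines by trace ID."""

    def __init__(self) -> None:
        super().__init__()
        self.by_trace: Dict[str, List[str]] = defaultdict(list)

    def emit(self, record: logging.LogRecord) -> None:
        self.by_trace[record.trace_id].append(record.getMessage())


log = logging.getLogger("graphql.tracing")
log.setLevel(logging.INFO)
log.propagate = False
collector = CollectingHandler()
collector.addFilter(TraceIdFilter())
log.addHandler(collector)


async def load_user(user_id: int) -> Dict[str, int]:
    start = time.perf_counter()
    await asyncio.sleep(0.001)  # pretend data-source access
    log.info("data-loader users(%s) took %.1f ms", user_id, (time.perf_counter() - start) * 1000)
    return {"id": user_id}


async def resolve_user(user_id: int) -> Dict[str, int]:
    log.info("resolver Query.user(%s) called", user_id)
    return await load_user(user_id)


async def handle_query(user_ids: List[int]) -> str:
    trace_id = uuid.uuid4().hex[:8]
    trace_id_var.set(trace_id)  # tag the query as it comes in
    log.info("query received")
    await asyncio.gather(*(resolve_user(i) for i in user_ids))
    return trace_id


async def main() -> List[str]:
    # Two concurrent queries: their log lines interleave but stay separable.
    return await asyncio.gather(handle_query([1, 2]), handle_query([3]))


trace_ids = asyncio.run(main())
for trace_id in trace_ids:
    print(trace_id, collector.by_trace[trace_id])
```

The resolvers and the data-loader never receive the ID as an argument. It travels with the asyncio context, which keeps the GraphQL code itself free of tracing plumbing.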

Conclusion

Understanding how the backend for a GraphQL API is structured gives us more actionable ideas of where to monitor such a system.

Things have certainly become a bit more cumbersome than with REST APIs, but there is nothing magical happening in a GraphQL API; it’s just code we can hook into for different purposes like monitoring.

Once we gain visibility into our production systems, questions about caching and error handling also become clearer.