ChatGPT API Pricing (Cost): Everything You Need to Know

Introduction

API pricing is important for developers and businesses alike, as it shapes strategic decisions and resource allocation. Because APIs are integral to AI app developers’ frameworks, aligning cost with value lets organizations and customers make informed choices and avoid unexpected financial hurdles. For AI-based API products like the ChatGPT API, pricing models must offer clarity and flexibility so that developers using ChatGPT can anticipate costs and keep budgets under control. A transparent structure empowers businesses to scale operations efficiently, aligning usage with budgetary considerations.

What is ChatGPT API?

The ChatGPT API is a tool developed by OpenAI that enables interactive, context-aware conversations. Originally built on the GPT-3.5 architecture, it is a powerful tool for software applications such as chat-based tools and language-driven tasks. The ChatGPT API enables the integration of OpenAI’s large language models into external applications, products, and services. Because it can interpret and respond to user inputs, the ChatGPT API gives developers the means to create personalized user experiences and enhance customer support.

Understanding the ChatGPT API Cost Structure

In the API ecosystem, transparent pricing plans and structures are a requirement for any company with a monetized tool or service, and this clarity is particularly crucial for AI-based tools and products. Pricing is often the dealbreaker for AI-based APIs because of the inherent expense of running resource-hungry models. Because many companies monetize their AI tools to offset the operational costs of maintaining their AI infrastructure, pricing must be top of mind for API product decision-makers and API users alike. A clear pricing model enables users to predict and manage costs effectively while avoiding unexpected financial burdens.

Several factors contribute to the overall ChatGPT API pricing model, reflecting the computational demands of AI products. First, the volume of usage is the fundamental determinant. The pricing model takes a pay-as-you-go, usage-based approach to billing: every request made to the API is metered by the number of tokens it processes, and users are billed accordingly. This reflects the computational resources required to process and respond to each API call, which directly drives the overall cost.
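As a simplified sketch (our own notation, not a formula OpenAI publishes), the charge for a billing period can be written as a sum over requests, with input and output tokens priced at separate per-1,000-token rates, as OpenAI lists them for its chat models:

$$
C = \sum_{i=1}^{n} \frac{t^{\mathrm{in}}_i \, p_{\mathrm{in}} + t^{\mathrm{out}}_i \, p_{\mathrm{out}}}{1000}
$$

Here $n$ is the number of requests in the period, $t^{\mathrm{in}}_i$ and $t^{\mathrm{out}}_i$ are the input and output tokens of request $i$, and $p_{\mathrm{in}}$ and $p_{\mathrm{out}}$ are the model’s prices per 1,000 tokens. More requests, longer prompts, and longer responses each raise $C$.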

OpenAI also varies the price of API usage depending on which large language model is used. Larger, more capable models require more computation per token, and a critical factor on OpenAI’s end is processing time, or response latency: the quicker the response required, the more computational resources are needed to generate prompt, relevant output without compromising its quality.

The complexity of the tasks assigned to the API also influences cost. Detailed language-processing tasks tend to involve longer prompts and longer responses, and because billing counts both input and output tokens, they incur higher costs. This reflects the versatility of ChatGPT: it can handle language-driven applications of widely varying complexity and, as a result, fit a range of budgets. Beyond computational cost, the scale of model usage is a key consideration. Different plans or tiers let users choose the language model that best aligns with their use case.

Scalability is a crucial concern for AI-based companies, which often experience fluctuating demand. To help, API providers often offer detailed documentation and pricing calculators. This self-service approach lets developers estimate costs from their expected usage of specific models and make informed decisions.
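A pricing calculator of this kind boils down to simple arithmetic over an expected usage pattern. The sketch below is illustrative only: the model names and per-1,000-token rates are placeholders, not OpenAI’s prices, and `monthly_estimate` is a hypothetical helper.

```python
from decimal import Decimal

# Placeholder (input, output) rates in dollars per 1,000 tokens for two
# hypothetical models; substitute current figures from OpenAI's pricing page.
RATES_PER_1K = {
    "smaller-model": (Decimal("0.001"), Decimal("0.002")),
    "larger-model": (Decimal("0.01"), Decimal("0.03")),
}


def monthly_estimate(model: str, requests_per_day: int, avg_input_tokens: int,
                     avg_output_tokens: int, days: int = 30) -> Decimal:
    """Project one month's spend for an expected daily usage pattern."""
    in_rate, out_rate = RATES_PER_1K[model]
    total_input = requests_per_day * avg_input_tokens * days
    total_output = requests_per_day * avg_output_tokens * days
    return (total_input * in_rate + total_output * out_rate) / 1000


# Same hypothetical workload on each model: 2,000 requests a day,
# about 300 input tokens and 150 output tokens per request.
for model in RATES_PER_1K:
    estimate = monthly_estimate(model, requests_per_day=2000,
                                avg_input_tokens=300, avg_output_tokens=150)
    print(f"{model}: ${estimate:.2f} per month")
```

With these placeholder rates the identical workload comes to $36.00 a month on the smaller model and $450.00 on the larger one, which is why model choice can matter as much as request volume.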

How much does the ChatGPT API cost?

Because the cost of using the ChatGPT API varies with the model used and the length and complexity of the input, it’s difficult to give a single example price; no two use cases are the same. Consider, for example, an AI chatbot or virtual assistant built on the ChatGPT API. This kind of tool facilitates natural-language interactions, helping users gather information or get assistance through conversation. To build it, you’d want access to a conversational language model such as GPT-4 to process user inputs and generate relevant, coherent, context-aware responses. GPT-4 can follow complex natural-language instructions and solve difficult problems accurately, making it a natural choice for a conversation-based tool.

The number of tokens your product consumes through the ChatGPT API depends on the complexity and length of its conversations. Both input and output tokens count toward the total (priced per thousand tokens). Tokens are chunks of text that can be as short as one character or as long as one word. For example, the sentence “Please Schedule A Meeting for 10 AM” might be split into roughly seven tokens, such as [“Please”, “ Schedule”, “ A”, “ Meeting”, “ for”, “ 10”, “ AM”]; the exact split depends on the model’s tokenizer. The billed total includes both the tokens in the user’s input and the tokens in the model’s response. If the model replies with another 10 tokens, the exchange totals 17 tokens (not counting any work the tool does to actually place the meeting on the calendar). As a rough rule of thumb, a common English word, including short words like “the”, “or”, and “a”, is often a single token, which is why this seven-word sentence comes to about seven tokens.
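To make the arithmetic concrete, here is a minimal sketch that prices the 17-token meeting exchange. The per-1,000-token rates are assumed placeholders, not quoted prices, and `request_cost` is a hypothetical helper; it uses `Decimal` so that tiny per-request amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal

# Assumed example rates in dollars per 1,000 tokens; placeholders only.
# Check OpenAI's pricing page for the model you actually use.
INPUT_RATE_PER_1K = Decimal("0.01")
OUTPUT_RATE_PER_1K = Decimal("0.03")


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Dollar cost of one request; input and output tokens are priced separately."""
    return (input_tokens * INPUT_RATE_PER_1K
            + output_tokens * OUTPUT_RATE_PER_1K) / 1000


# The meeting example: about 7 input tokens plus a 10-token reply.
print(f"${request_cost(7, 10)}")
```

At these assumed rates the exchange costs $0.00037. A single request is cheap; costs become significant only when multiplied across many users and long conversations.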

Longer conversations with more back-and-forth dialogue have higher token counts. For example, if a user asked their AI-enhanced virtual assistant to book a meeting but the requested time slot was already taken, the billing meter would count every exchange as the user and the assistant worked out a viable time.
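Back-and-forth grows costs faster than it might seem. The Chat Completions endpoint does not remember earlier turns, so a typical chat app resends the conversation history with every request, and those earlier tokens are billed again as input. The sketch below assumes the app resends the full history each turn and ignores the small per-message formatting overhead; `conversation_tokens` is a hypothetical helper and the token counts are made up.

```python
def conversation_tokens(turns: list[tuple[int, int]]) -> tuple[int, int]:
    """Total billed (input, output) tokens when every request resends the history.

    Each turn is (user_message_tokens, reply_tokens).
    """
    history = 0
    billed_input = billed_output = 0
    for user_tokens, reply_tokens in turns:
        prompt = history + user_tokens   # earlier turns are sent again as input
        billed_input += prompt
        billed_output += reply_tokens
        history = prompt + reply_tokens  # the reply joins the history for the next turn
    return billed_input, billed_output


# Hypothetical dialogue: one exchange versus a three-exchange rescheduling.
print(conversation_tokens([(7, 10)]))
print(conversation_tokens([(7, 10), (8, 12), (6, 9)]))
```

The single exchange bills 17 tokens, while the three-exchange version bills 75 input and 31 output tokens (106 in total), even though the new text typed and generated adds up to only 52 tokens.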

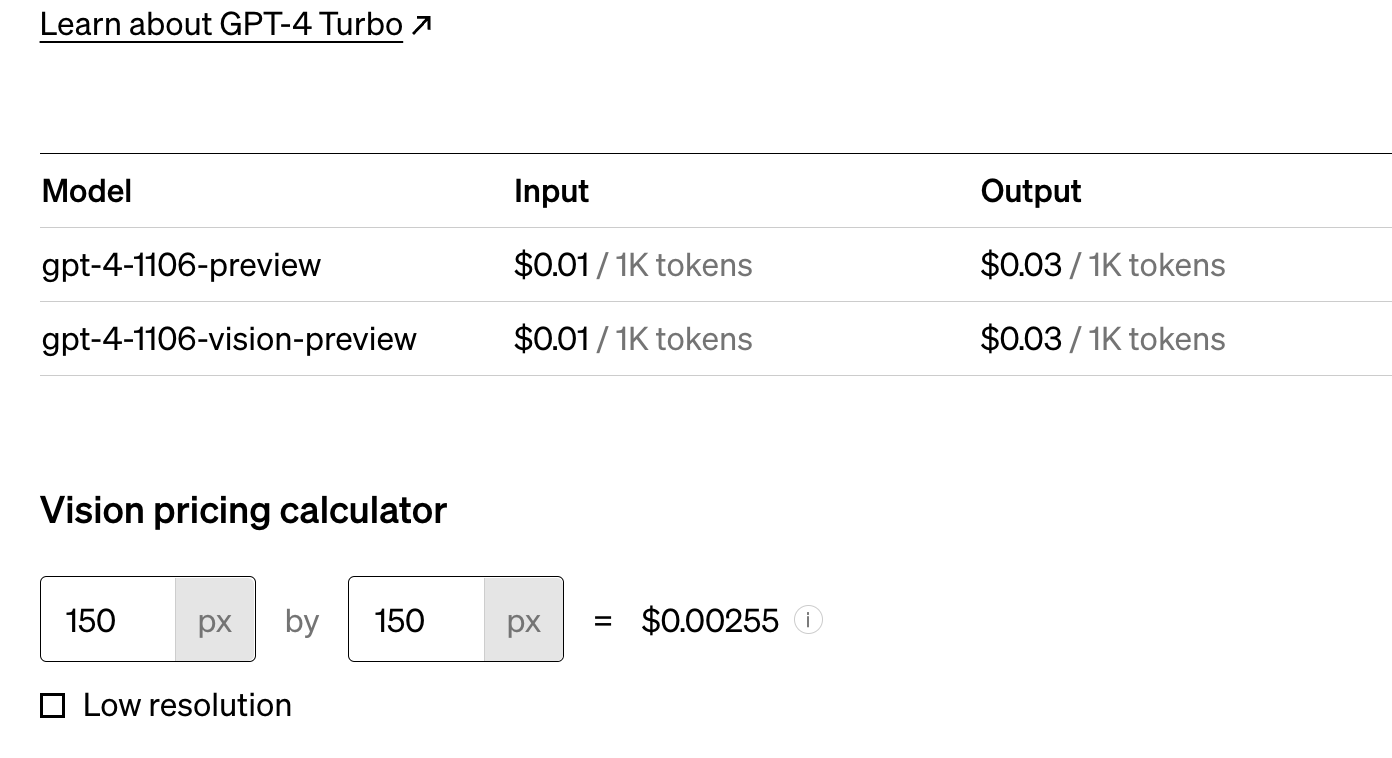

GPT-4 Turbo cost estimator from OpenAI’s pricing page.

The ChatGPT API pricing model is based on the total number of tokens processed by a given language model, so managing token usage effectively is important. Every additional token adds cost and processing time, and each model also has a maximum context length (a combined limit on input and output tokens); requests that exceed it are rejected. Developers may therefore need to truncate or omit parts of their requests to fit within the token limits set by the API.
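One common way to keep requests within a token budget is to drop the oldest turns of a conversation. The sketch below assumes chat-style message dicts whose first entry is a system message; `approx_tokens` and `trim_history` are hypothetical helpers. The four-characters-per-token estimate is OpenAI’s rough rule of thumb for English text, so use the model’s actual tokenizer (for example, the `tiktoken` library) when exact counts matter.

```python
import math


def approx_tokens(text: str) -> int:
    # Rough estimate: about 4 characters per token for common English text.
    return max(1, math.ceil(len(text) / 4))


def trim_history(messages: list[dict], budget: int, count=approx_tokens) -> list[dict]:
    """Keep the system message plus as many of the newest messages as fit in budget.

    Assumes messages[0] is the system message; it is always kept, even if it
    alone exceeds the budget.
    """
    system, rest = messages[0], messages[1:]
    used = count(system["content"])
    kept = []
    for msg in reversed(rest):          # walk from newest to oldest
        cost = count(msg["content"])
        if used + cost > budget:
            break                       # this message and everything older is dropped
        kept.append(msg)
        used += cost
    return [system] + kept[::-1]        # restore chronological order


history = [
    {"role": "system", "content": "You schedule meetings."},
    {"role": "user", "content": "Book a meeting for 10 AM."},
    {"role": "assistant", "content": "10 AM is taken. Would 11 AM work?"},
    {"role": "user", "content": "Yes, 11 AM is fine."},
]
print([m["role"] for m in trim_history(history, budget=20)])
```

With a 20-token budget the oldest user message is dropped, leaving the system message and the two most recent turns. Dropping whole turns keeps each remaining message intact; summarizing older turns is an alternative when their content still matters.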

How does the ChatGPT API Pricing Model Work?

Overview of the pricing model

The ChatGPT API uses a usage-based pricing model: users are charged for the number of tokens processed, including both input and output tokens. The total tokens exchanged in a conversation between a user and the AI model determine the final cost. Because users pay only for the computational resources used to process and generate responses, the ChatGPT API is a reasonable choice for developers looking for a scalable generative AI solution. Each API call generates costs based on the length and complexity of the request and response, so developers must manage token usage to stay within their allocated limits and avoid unexpected costs from overuse.
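Chat Completions responses include a `usage` object reporting `prompt_tokens`, `completion_tokens`, and `total_tokens`, which makes it straightforward to keep a running tally of spend on the client side. The sketch below is a hypothetical `UsageTracker` that consumes those dicts; the rates and budget are placeholder assumptions, and a tally like this complements, rather than replaces, the limits you set in your OpenAI account.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class UsageTracker:
    """Running tally of token usage and estimated spend against a budget.

    Rates and budget are in dollars; the rates are placeholders to be
    replaced with current figures for your model.
    """
    input_rate_per_1k: Decimal
    output_rate_per_1k: Decimal
    budget: Decimal
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, usage: dict) -> None:
        # `usage` has the shape of the usage object in a Chat Completions
        # response: {"prompt_tokens": ..., "completion_tokens": ..., "total_tokens": ...}
        self.prompt_tokens += usage["prompt_tokens"]
        self.completion_tokens += usage["completion_tokens"]

    @property
    def spent(self) -> Decimal:
        return (self.prompt_tokens * self.input_rate_per_1k
                + self.completion_tokens * self.output_rate_per_1k) / 1000

    def over_budget(self) -> bool:
        return self.spent >= self.budget


# Hypothetical usage with made-up token counts and a one-cent budget.
tracker = UsageTracker(Decimal("0.01"), Decimal("0.03"), budget=Decimal("0.01"))
tracker.record({"prompt_tokens": 400, "completion_tokens": 100, "total_tokens": 500})
print(tracker.spent, tracker.over_budget())
```

After one recorded response the estimated spend is $0.007, still under budget; a second identical response pushes it to $0.014 and `over_budget()` returns `True`, at which point an application might pause or alert.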

Explanation of tiered or usage-based pricing

The ChatGPT API is a classic example of usage-based pricing, offering access to different language models that cater to varying user needs. While tiered structures often let users choose plans based on usage volume or specific features, the ChatGPT API offerings all fall under the “Pay-As-You-Go” (PAYG) category. PAYG refers to a pricing plan in which customers are charged based on their actual usage of a product or service. For API products, including the ChatGPT API, a PAYG model means that users are billed for the resources they consume, such as the number of API calls made or, in the case of ChatGPT and other AI models, the number of tokens used.

In addition, OpenAI offers ChatGPT Plus, a $20-per-month subscription that provides enhanced access to the ChatGPT service, including access during peak times, faster response times, and priority access to new features and improvements. It aims to give a premium experience at a predictable price to subscribers who may not be good candidates for PAYG pricing, opening the tool up to more users with smaller budgets. Note that ChatGPT Plus covers the ChatGPT app itself; API usage is billed separately.

Potential discounts or pricing variations based on usage

Currently, OpenAI does not advertise discounts or coupons for the ChatGPT API. However, because developers can contact sales or add credits to their own account, it is reasonable to expect that usage limits and pricing can be discussed with the OpenAI team. At the very least, ChatGPT itself can help you find savings elsewhere, freeing up budget for your ChatGPT API spend.

ChatGPT API Cost Components

The overall cost of using the ChatGPT API is influenced by several factors.

- API Calls: Every request to the ChatGPT API incurs a charge, whichever model you use, so the number of calls you make is a major driver of cost. Because each call is billed by the tokens it processes, higher usage levels mean higher costs.

- Token Usage: ChatGPT processes data as tokens: units of text (sometimes a single letter) processed by a given model, counting both the user’s input and the model’s output. The total number of tokens in a conversation directly determines the cost of each API call. To help control this, OpenAI lets you set usage limits in your account dashboard so that spending stays within predefined bounds and you avoid unexpected charges.

- Response Time: Some applications need faster responses. On the API, latency and cost depend mainly on the model chosen and on how many tokens it must generate, since faster responses from capable models require additional computational resources on the provider’s end. ChatGPT Plus, by contrast, advertises faster response times for a flat monthly fee, but it is a separate subscription for the ChatGPT app and does not change API billing.

- Additional Features: Some API providers that build on ChatGPT offer additional features or premium functionality, such as advanced language-processing capabilities or specialized tools, which can increase the overall volume of underlying ChatGPT usage. Users opting for such features should contact the provider’s sales team to understand the true cost of these services.

Conclusion

Developers and businesses should consider the flexible pricing model of the ChatGPT API to maintain optimal cost and usage efficiency. With a volume-based pricing structure, ChatGPT users can make informed decisions to predict and manage costs effectively. By understanding the components that drive cost, such as API calls, token usage, and the separate ChatGPT Plus subscription, users can tailor their plans to their specific use case requirements and budget constraints.

The flexibility of ChatGPT API pricing accommodates diverse workloads, adapting to fluctuating usage patterns and programmatic needs. Developers can monitor and control their usage directly in their OpenAI account dashboard, avoiding unexpected charges and optimizing their budget allocation. Because these usage limits are set and adjusted by the user, the ChatGPT API is a truly self-service option for API developers. The availability of different language models at different price points lets users access the features and models pertinent to their project, ensuring a customizable and scalable experience.

As the API ecosystem continues to evolve, it is clear that AI-based API products will become more foundational for developers building dependent applications. By understanding the flexible, interconnected pricing of the ChatGPT API, developers and businesses will be equipped to make strategic decisions that optimize usage in their language-driven applications.