How to Best Plan Usage-Based Pricing For AI Agents

The rise of AI agents has reshaped software economics; businesses are increasingly adopting them for efficiency, scale, and faster delivery of value. Pricing them, however, remains a hard problem.

Established norms tie cost to headcount or access, but that doesn’t fit how agents deliver value, and newer approaches often create more confusion than clarity. Buyers worry about fairness, vendors worry about protecting their margins, and both sides are still trying to define what “value” actually means.

Whatever your industry or customer base, the best pricing model ties cost to real, observable activity while staying flexible as the market matures. This article examines why AI agents are uniquely difficult to price and proposes strategic guidelines for implementing usage-based schemes with Moesif.

Implement Predictable Pricing for AI Agents

14 day free trial. No credit card required.

Try for Free

What are AI Agents?

AI agents are software systems that can plan and execute tasks autonomously with minimal human intervention. Typically powered by large language models (LLMs) and integrated with external tools or APIs, they decompose goals into smaller steps, call the right functions, and iterate until a task is complete.

Unlike traditional apps with predefined workflows, AI agents adapt in real time. They can chain multiple tool calls, fetch context from external data sources, and refine outputs dynamically.

Why Pricing AI Agents is Difficult

The way agents operate introduces variability and uncertainty that conventional SaaS pricing models were not designed to accommodate:

Volatile Cost of Goods Sold

Every agent invocation consumes resources: tokens, compute, retrievals, and sometimes external APIs that charge per call. The cost of a single request can swing widely depending on prompt size and complexity, sequence depth, and cache reuse. If you don’t meter carefully, you risk eroding margins and ending up with unpredictable unit economics.
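To make the variance concrete, here is a minimal sketch that estimates the cost of goods sold for a single agent run. The prices, the cache discount, and the `AgentRun` fields are hypothetical placeholders, not any vendor’s actual rates; substitute your own model and API pricing.

```python
from dataclasses import dataclass

# Hypothetical unit prices in USD; replace with your actual model and vendor rates.
PRICE_PER_1K_INPUT_TOKENS = 0.003
PRICE_PER_1K_OUTPUT_TOKENS = 0.015
CACHED_INPUT_PRICE_FACTOR = 0.1   # assumption: cached input tokens cost 10% of list price
PRICE_PER_EXTERNAL_CALL = 0.002


@dataclass
class AgentRun:
    input_tokens: int
    output_tokens: int
    cached_input_tokens: int = 0   # portion of input_tokens served from a prompt cache
    external_calls: int = 0        # third-party API calls billed per call


def run_cost(run: AgentRun) -> float:
    """Estimate the cost of goods sold for one agent run."""
    fresh_input = run.input_tokens - run.cached_input_tokens
    effective_input = fresh_input + run.cached_input_tokens * CACHED_INPUT_PRICE_FACTOR
    input_cost = effective_input / 1000 * PRICE_PER_1K_INPUT_TOKENS
    output_cost = run.output_tokens / 1000 * PRICE_PER_1K_OUTPUT_TOKENS
    return input_cost + output_cost + run.external_calls * PRICE_PER_EXTERNAL_CALL


# The same "summarize this document" request, handled shallowly vs. with deep tool chaining.
light = AgentRun(input_tokens=1_000, output_tokens=200)
heavy = AgentRun(input_tokens=40_000, output_tokens=3_000, external_calls=5)
print(f"light: ${run_cost(light):.4f}, heavy: ${run_cost(heavy):.4f}")
```

Under these assumed prices, the heavy run costs roughly 29 times as much as the light one, even though the customer issued the same prompt.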

Non-Deterministic Tasks

A seemingly simple prompt can trigger dozens of tool calls, retries, or checks. For example, asking an agent to summarize a document might result in multiple retrieval queries, embedding generation, and several LLM passes. This workload variance makes it difficult to standardize what constitutes a “unit of work” for billing.

Seat-Based Pricing No Longer Fits

AI agents promise automation and efficiency, replacing seats rather than adding them. Charging per seat therefore shrinks the revenue base precisely as the product becomes more valuable.

In traditional software, a seat is a proxy for value: a human using the tool to create value. In an agentic model, the AI itself creates the value, so seat-based pricing can massively understate its output. Customers instead expect pricing to map to the savings or outputs delivered.

Immaturity in ROI Attribution

Charging per fraud case prevented, ticket resolved, or sale closed is appealing, but outcome-based charges like these are hard to operationalize. How can you prove that the AI agent directly caused the outcome? Most companies don’t yet have the telemetry, baselines, and customer trust to do that. Until attribution improves, usage is often the only tenable stand-in for value.

The Pricing Model Spectrum for AI Agents

Here are the dominant pricing archetypes in today’s market:

Seat-Based

Seat pricing mirrors classic SaaS and is often the easiest to buy through existing procurement processes. It fits augmentation tools, for example, where humans remain the primary operators and usage broadly correlates with headcount.

Risks

- Seat compression when agents replace human effort, shrinking the revenue base

- Poor cost alignment if a few power users drive disproportionate compute usage

Agent-Based

This model charges per named agent or agent type, effectively mapping budget to digital labor. It works when an agent encapsulates a role, like a support representative, and carries clear SLAs.

Risks

- Compute costs can grow while revenue stays flat

- Less-used agents may appear overpriced, reducing renewal rates

Outcome-Based

This model charges customers based on what agents deliver:

- Jobs completed: operational KPIs like tickets resolved, workflows finished successfully

- Financial outcomes: cost savings, revenue generated

Risks

- Attribution disputes over whether the agent directly brought about the outcome

- External factors, like market conditions, may affect results outside the vendor’s control

- Overhead for defining outcomes and arbitrating disagreements

Credit-Based

This model abstracts heterogeneous costs (tokens, tool calls, RAG retrievals) into credits governed by a burn table. Buyers pre-purchase credits and spend them as the agent works. It simplifies billing for complex agent runs and gives customers a visible usage balance.

Risks

- Conversion rates can feel ambiguous without clear communication

- Rollover credits can accumulate and impact revenue predictability

- Unclear burn rules frustrate customers when balances deplete faster than expected
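A burn table can be as simple as a mapping from cost driver to credits per unit. The sketch below uses hypothetical drivers and rates; publishing a table like this to customers is one way to reduce the ambiguity risks listed above.

```python
# Hypothetical burn table: credits consumed per unit of each cost driver.
BURN_TABLE = {
    "llm_1k_tokens": 1.0,
    "tool_call": 2.0,
    "rag_retrieval": 0.5,
}


class CreditWallet:
    """Prepaid credit balance that agent activity draws down."""

    def __init__(self, balance: float) -> None:
        self.balance = balance

    def quote(self, usage: dict[str, float]) -> float:
        # A KeyError on an unknown driver is deliberate: unpriced usage should fail loudly.
        return sum(BURN_TABLE[driver] * qty for driver, qty in usage.items())

    def burn(self, usage: dict[str, float]) -> float:
        """Deduct credits for one agent run; refuse the run if the balance can't cover it."""
        cost = self.quote(usage)
        if cost > self.balance:
            raise ValueError(f"insufficient credits: need {cost}, have {self.balance}")
        self.balance -= cost
        return cost


wallet = CreditWallet(balance=100)
wallet.burn({"llm_1k_tokens": 12, "tool_call": 4, "rag_retrieval": 6})
print(wallet.balance)  # 77.0
```

Exposing `quote` separately lets a customer-facing view show what a run will cost in credits before it burns anything.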

Usage-Based

This model charges by measurable consumption:

- Resource-centric: ties closely to cost of goods sold; for example, per 1k tokens or per compute minute

- Interaction-centric: easier for customers to audit and predict; for example, per request or per conversation

Risks

- Bill shock without thresholds or alerts, especially when workloads spike

- Disputes if customers can’t easily audit usage or understand the metric behind their bill

Hybrid Models

Most vendors combine a base fee with included usage and overage charges, sometimes expressed as credits for simplicity. Hybrid models protect MRR while letting revenue scale with adoption, and they offer a practical stepping stone toward outcome-based pricing as attribution matures.

Risks

- Poorly designed thresholds can frustrate customers who frequently overrun or underuse limits

- Complexity in explaining plan details to buyers
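The base-plus-overage arithmetic behind most hybrid plans fits in a few lines. The plan values here are hypothetical, and the billable unit could equally be workflows, requests, or credits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridPlan:
    base_fee: float       # fixed monthly platform fee (protects MRR)
    included_units: int   # usage covered by the base fee
    overage_rate: float   # price per unit beyond the allowance


def monthly_invoice(plan: HybridPlan, units_used: int) -> float:
    """Base fee plus overage for usage above the included allowance."""
    overage_units = max(0, units_used - plan.included_units)
    return plan.base_fee + overage_units * plan.overage_rate


# Hypothetical plan: $500/month including 2,000 workflow runs, then $0.25 per run.
plan = HybridPlan(base_fee=500.0, included_units=2_000, overage_rate=0.25)
for used in (1_200, 2_000, 2_500):
    print(used, monthly_invoice(plan, used))
```

A customer using 1,200 runs pays the same as one using 2,000; that gap is where the threshold-design risk above comes from.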

The Case for (and Against) Usage-Based Pricing

Usage-based pricing, although pragmatic, doesn’t work in every situation for monetizing AI agents.

When Usage-Based Pricing Works

- Outcomes Cannot Be Substantiated Yet

  Most companies lack the telemetry and attribution tooling to charge directly for outcomes like “sales closed” or “fraud detected”. Usage provides a measurable, auditable proxy until outcome-based models mature, and it is easier to defend in negotiations because logs show exact consumption.

- Costs Are Material and Variable

  Every agent invocation incurs costs from tokens, context length, tool chaining, and external API calls, and these inputs fluctuate widely across requests. To protect gross margins while still scaling with adoption, vendors need to align revenue with these cost drivers.

- Customers Value Elasticity

  Customers want to start small, experiment, and grow without committing to fixed seat counts. Usage-based pricing adapts naturally to different consumption levels, and with clear usage caps and alerts, it can offer predictability while retaining the elasticity buyers want.

When To Avoid Usage-Based Pricing

- Workloads Are Too Unpredictable

  If agent behavior fluctuates heavily or is opaque, customers can’t forecast their bills, and that uncertainty can erode confidence even when the pricing model is fair.

- Outcomes Are Well-Defined

  Mature domains like support resolution or invoice processing have clear, explicit outcomes. There, outcome-based pricing may align better with customer-perceived value and lead to fewer disputes over whether usage matches impact.

Designing the Usage Meter: Identifying and Measuring Billable Usage

The billable metric for a usage-based model must be clear, predictable, and auditable; otherwise you risk billing disputes and lost customer trust. A defensible billing meter balances technical feasibility, cost alignment, and customer comprehension.

Picking the Right Unit of Usage

The best billing metric is one that customers can understand, forecast, and validate. Common options include:

- Per request: Clean and auditable, but may obscure underlying cost variance between “light” and “complex” requests

- Per 1k tokens: Aligns revenue with model costs, though tokens are less intuitive for non-technical customers

- Per tool call: A good fit for multi-agent systems where each tool invocation drives incremental cost, though you must carefully exclude or discount retries and validation runs
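To see how the choice of unit changes what a customer is billed for, the sketch below computes all three quantities from the same two hypothetical requests. The `Request` fields are assumptions about what your telemetry captures.

```python
from dataclasses import dataclass


@dataclass
class Request:
    tokens: int                       # total input + output tokens
    tool_calls: int                   # all tool invocations, including retries
    non_billable_tool_calls: int = 0  # retries and validation runs to exclude


def billable_quantities(requests: list[Request]) -> dict[str, float]:
    """The same activity, measured in three candidate billing units."""
    return {
        "requests": len(requests),
        "k_tokens": sum(r.tokens for r in requests) / 1000,
        "tool_calls": sum(r.tool_calls - r.non_billable_tool_calls for r in requests),
    }


light = Request(tokens=800, tool_calls=1)
heavy = Request(tokens=25_000, tool_calls=12, non_billable_tool_calls=3)
print(billable_quantities([light, heavy]))
```

Per request, both count as one unit; per token or per billable tool call, the heavy request is more than 30 times and 9 times larger, respectively.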

Handling Edge Cases

Without guardrails, edge cases can skew invoices and cause disputes:

- Retries and timeouts: A failed or repeated request should not double-charge the customer

- Cache hits: Cached responses reduce actual compute, so customers shouldn’t be charged full price

- Background agents: Long-running or autonomous background tasks must have explicit billing rules to avoid unbounded costs

- Evaluation or safety runs: Extra runs might be carried out to verify an agent’s output; for example, checking accuracy or screening for harmful content. While important for reliability, customers rarely accept them as billable units

A well-designed meter defines which events count towards billing and which are absorbed as part of platform overhead.
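One way to encode these rules is a filter that runs before usage reaches the billing meter. This sketch rests on several assumptions: each event carries a hypothetical `idempotency_key` shared by a request and its retries, a `kind` field distinguishes agent work from evaluation, safety, and background runs, and the cache rate and background cap are placeholder policies.

```python
from dataclasses import dataclass


@dataclass
class UsageEvent:
    idempotency_key: str   # hypothetical: shared by a request and all of its retries
    status: str            # "success", "timeout", or "error"
    kind: str              # "agent", "eval", "safety", or "background"
    tokens: int
    cache_hit: bool = False


CACHE_HIT_RATE = 0.1            # hypothetical: bill cache hits at 10% of normal
BACKGROUND_TOKEN_CAP = 50_000   # hypothetical per-event cap for background runs


def billable_tokens(events: list[UsageEvent]) -> float:
    seen: set[str] = set()
    total = 0.0
    for e in events:
        if e.status != "success":
            continue                  # failed attempts and timeouts are not billed
        if e.kind in ("eval", "safety"):
            continue                  # verification runs are platform overhead
        if e.idempotency_key in seen:
            continue                  # only the first successful attempt counts
        seen.add(e.idempotency_key)
        tokens = e.tokens
        if e.kind == "background":
            tokens = min(tokens, BACKGROUND_TOKEN_CAP)  # explicit bound on background work
        total += tokens * (CACHE_HIT_RATE if e.cache_hit else 1.0)
    return total


events = [
    UsageEvent("r1", "timeout", "agent", 1_200),                  # first attempt times out
    UsageEvent("r1", "success", "agent", 1_200),                  # retry succeeds: billed once
    UsageEvent("r1", "success", "agent", 1_200),                  # duplicate delivery: ignored
    UsageEvent("r2", "success", "agent", 2_000, cache_hit=True),  # billed at the cache rate
    UsageEvent("r3", "success", "eval", 5_000),                   # evaluation run: absorbed
    UsageEvent("r4", "success", "background", 80_000),            # capped background task
]
print(billable_tokens(events))
```

Keeping these rules in one place makes them easy to document for customers and to audit when an invoice is questioned.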

Multiple Meters and Normalization

You can have multiple billing meters in place; in Moesif, you can create as many as you need and activate them selectively. Multiple meters don’t mean double charging. Rather, they help balance fairness and sustainability:

- A workflow fee may already account for average token usage, while a second billing meter tracks token data for visibility or overages.

- One unit of usage can anchor pricing, like workflows, while another sets boundaries. For example, each workflow may include up to N tokens, with token-based overages applied beyond that.

The ultimate goal is to protect margins from heavy users while keeping bills predictable and customer-friendly.
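The workflow-plus-overage pattern can be expressed as two meters over the same data. In this sketch the allowance (the “N” above) and both prices are hypothetical. Tokens inside each workflow’s allowance are covered by the workflow fee, so the token meter only bills the excess and no token is charged twice.

```python
INCLUDED_TOKENS_PER_WORKFLOW = 20_000   # hypothetical allowance (the "N" above)
WORKFLOW_PRICE = 0.50                   # hypothetical flat fee per completed workflow
OVERAGE_PRICE_PER_1K_TOKENS = 0.02      # hypothetical overage rate


def price_workflows(tokens_per_workflow: list[int]) -> dict[str, float]:
    """Anchor meter (workflow count) plus boundary meter (tokens beyond the allowance)."""
    workflows = len(tokens_per_workflow)
    overage_tokens = sum(max(0, t - INCLUDED_TOKENS_PER_WORKFLOW) for t in tokens_per_workflow)
    total = workflows * WORKFLOW_PRICE + overage_tokens / 1000 * OVERAGE_PRICE_PER_1K_TOKENS
    return {"workflows": workflows, "overage_tokens": overage_tokens, "total": total}


print(price_workflows([12_000, 18_000, 45_000]))
```

Only the third, unusually heavy workflow triggers overage, which is how the boundary meter protects margins without raising the price of typical runs.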

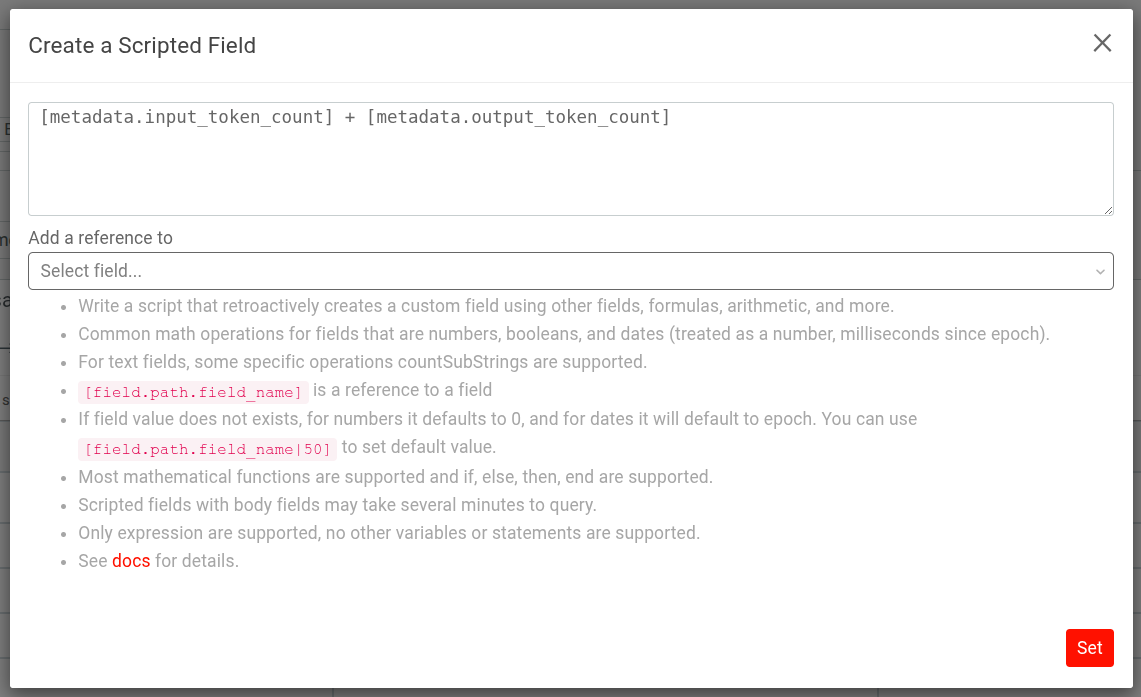

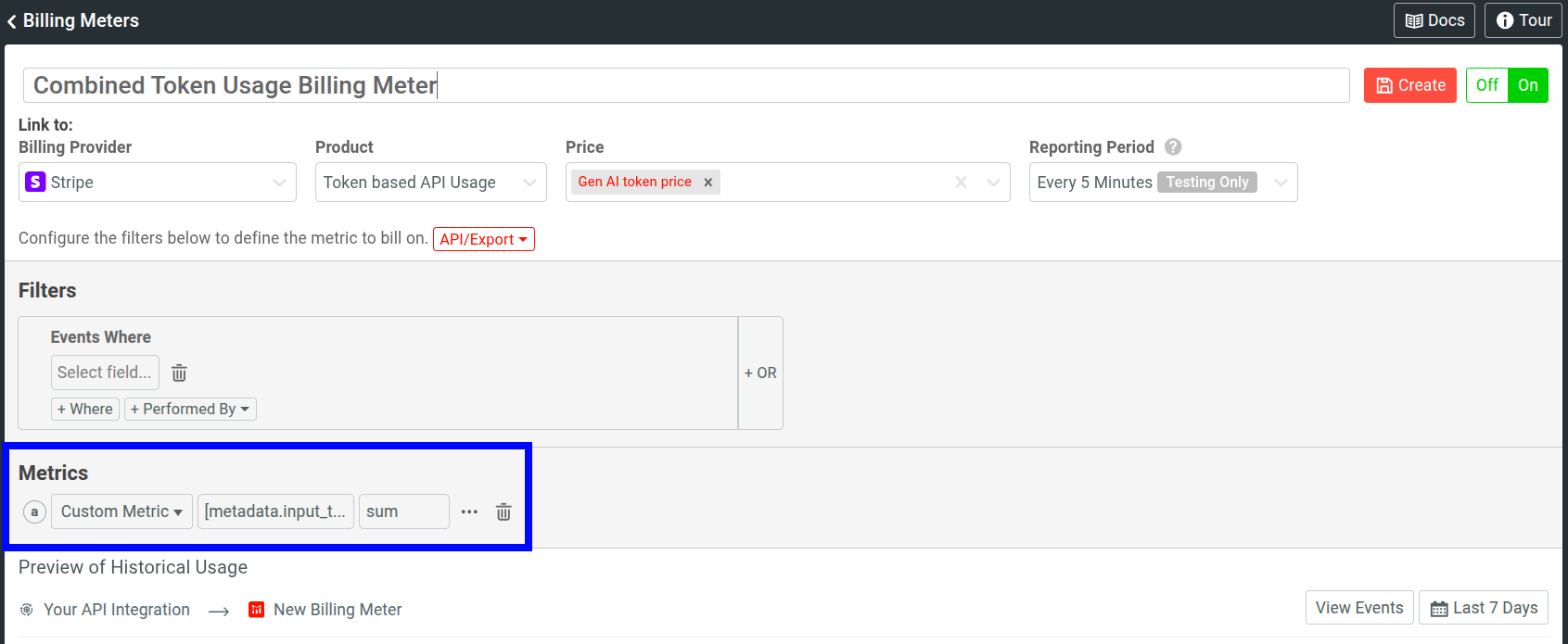

You can use Moesif’s Scripted Fields to transform messy or inconsistent data into clean billable metric. You can combine data to compute a metric; for example, combining input and output tokens:

Then specifying it as the billable metric in a billing meter:

Scripted Fields also let you normalize data across services using arithmetic formulas and conditional expressions.
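Scripted Fields have their own expression syntax in Moesif, which isn’t reproduced here; the Python sketch below only illustrates the kind of logic involved. It assumes two hypothetical services that report token usage under different field names, plus hypothetical per-tier weights that convert everything into a single “standard token” unit.

```python
# Hypothetical weights converting each model tier into "standard tokens".
MODEL_TIER_WEIGHTS = {"small": 0.25, "standard": 1.0, "large": 4.0}


def normalized_tokens(event: dict) -> float:
    """Combine input and output tokens, then weight them by model tier."""
    usage = event.get("usage", {})
    # Service A reports prompt/completion tokens; service B reports input/output tokens.
    input_tokens = usage.get("prompt_tokens", usage.get("input_tokens", 0))
    output_tokens = usage.get("completion_tokens", usage.get("output_tokens", 0))
    # Conditional expression: unknown or missing tiers fall back to the standard weight.
    weight = MODEL_TIER_WEIGHTS.get(event.get("model_tier"), 1.0)
    return (input_tokens + output_tokens) * weight


service_a = {"model_tier": "large", "usage": {"prompt_tokens": 1_000, "completion_tokens": 500}}
service_b = {"model_tier": "small", "usage": {"input_tokens": 2_000, "output_tokens": 2_000}}
print(normalized_tokens(service_a), normalized_tokens(service_b))
```

Falling back to the standard weight keeps billing running when a new tier appears, but you may prefer to reject unknown tiers so they surface during validation instead.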

Keeping Usage-Based Pricing Predictable and Trustworthy

Usage-based pricing works when:

- Data is clean enough to bill on

- Customers can clearly see what they are paying for

To that end, a disciplined approach to capturing data, with built-in controls and customer-facing visibility, goes a long way.

Capture the Right Data

-

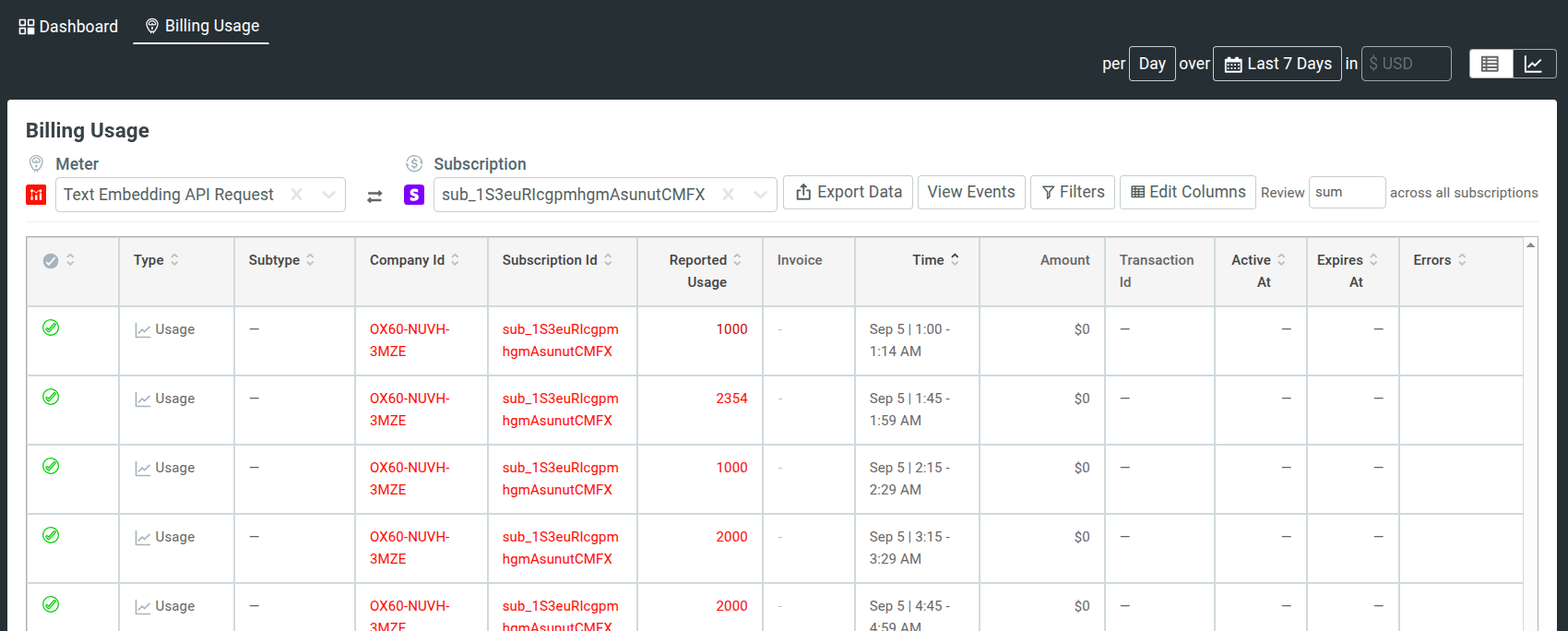

Link each customer-visible charge to specific requests and related steps or sub-steps so you can reconstruct any invoice line without conjecture. In Moesif, you can access a customer’s billing usage statistics in their Profile View.

![Moesif's company profile view showing billing usage statistics of a customer for a specific billing meter and subscription in the past 7 days. Moesif showing billing usage statistics of a customer for a specific billing meter and subscription]()

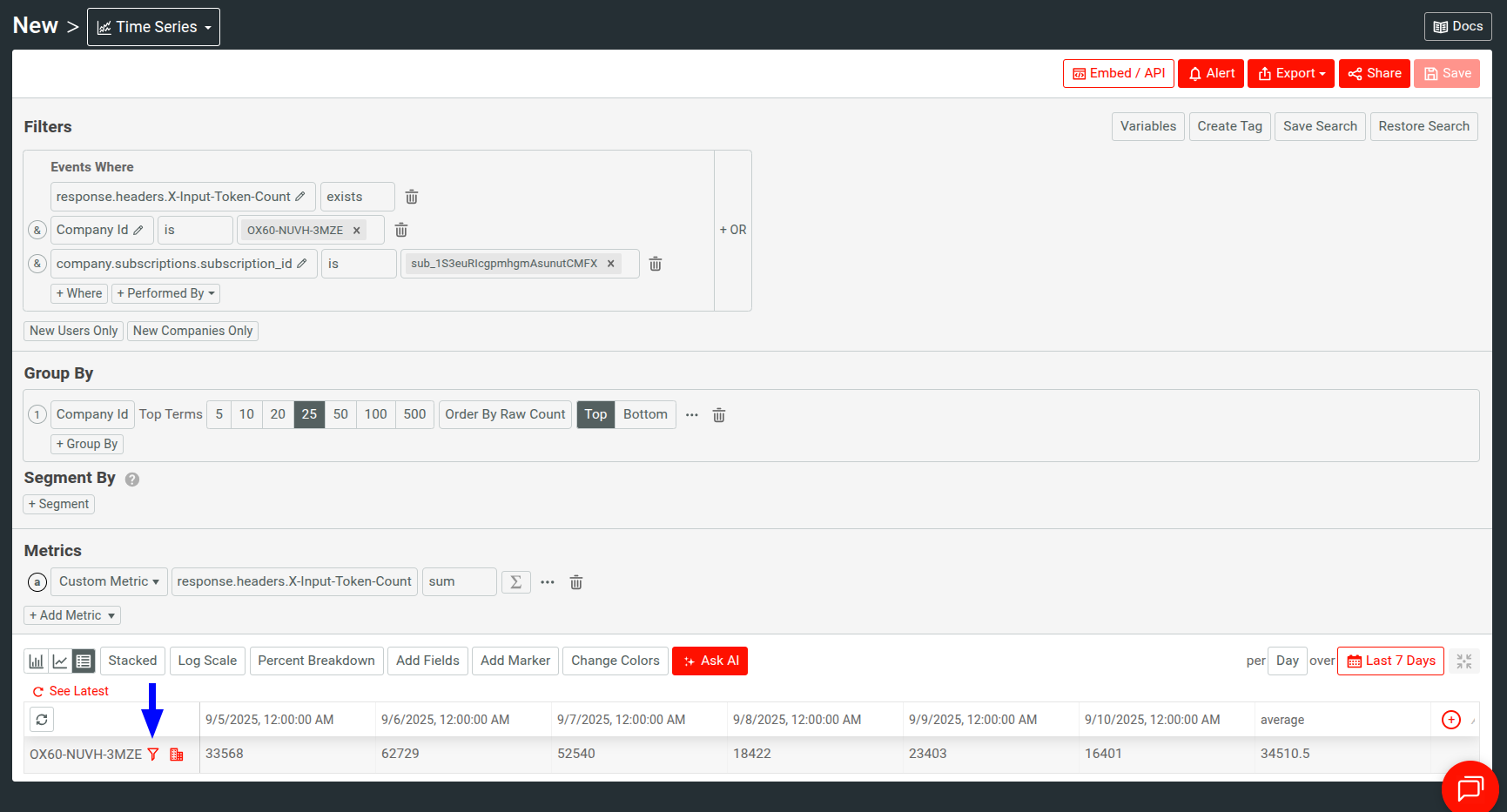

From here, you can dig deeper into usage and associated costs. For example, you can interact with a reported usage amount to open a time-series of their usage:

![Moesif showing a time-series analysis of a customer's billing usage in the past 7 days, with daily billable consumption amounts. Moesif showing a time-series view of a customer's billable consumption in the past 7 days.]()

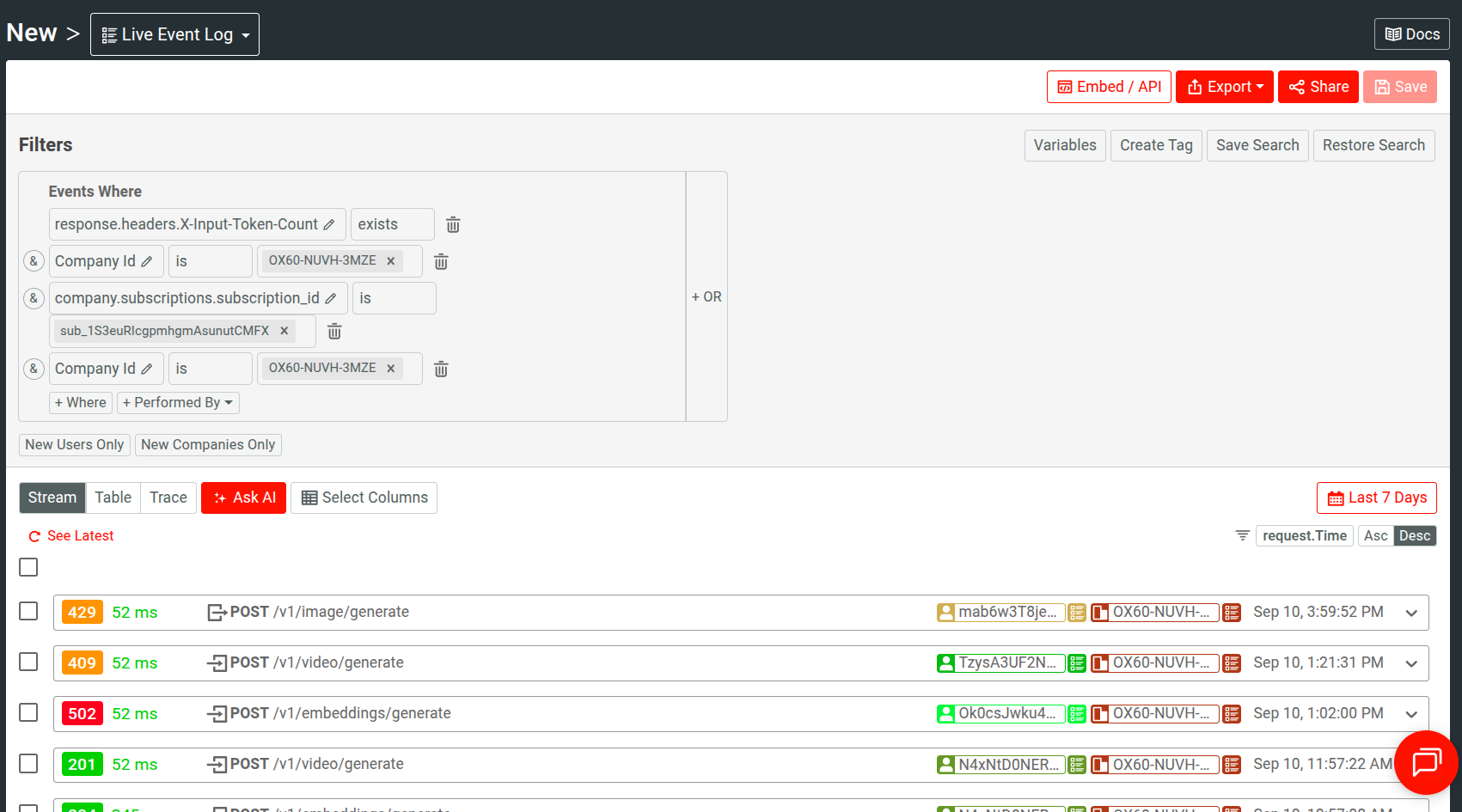

Then click the funnel icon to open the associated events responsible for the reported usage and therefore the specific charge:

![Moesif's Live Event Log showing events associated with a customer's reported usage under a subscription. A Live Event Log in Moesif showing events associated with a customer's reported usage under a subscription.]()

Moesif automatically assigns a session token to each API event for correlation, and its OpenTelemetry support helps maintain end-to-end traces.

- Log sufficient metadata for each invocation: user ID, company ID, agent, tool, tenant ID, input and output tokens, model type, and so on.

- Keep event definitions consistent; changing field names or meanings without notice can shift invoices even when usage hasn’t changed.

- Moesif supports custom actions, so you can define explicit start and stop points that identify successful workflow runs.

- Use Moesif’s Live Event Log to test and validate that you’re capturing the usage data you need.
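A lightweight way to enforce consistent event definitions is to validate every usage event against a fixed schema before it’s counted. The field names below are hypothetical, modeled on the metadata listed above, and the `start`/`end` markers stand in for whatever custom start-and-stop actions you define; this is plain Python, not a Moesif API.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AgentEvent:
    user_id: str
    company_id: str
    agent: str
    tool: str
    model: str
    input_tokens: int
    output_tokens: int
    workflow_id: str
    workflow_step: str  # hypothetical markers: "start", "step", or "end"


SCHEMA_FIELDS = {f.name for f in fields(AgentEvent)}


def parse_event(raw: dict) -> AgentEvent:
    """Reject events with missing or renamed fields instead of silently billing on them."""
    keys = set(raw)
    missing, unexpected = SCHEMA_FIELDS - keys, keys - SCHEMA_FIELDS
    if missing or unexpected:
        raise ValueError(f"schema mismatch: missing={sorted(missing)}, unexpected={sorted(unexpected)}")
    return AgentEvent(**raw)  # note: field types are not checked here


def completed_workflows(events: list[AgentEvent]) -> set[str]:
    """Count a workflow as successful only if both its start and end markers were logged."""
    started = {e.workflow_id for e in events if e.workflow_step == "start"}
    ended = {e.workflow_id for e in events if e.workflow_step == "end"}
    return started & ended


base = {"user_id": "u1", "company_id": "acme", "agent": "support", "tool": "search",
        "model": "m1", "input_tokens": 900, "output_tokens": 300,
        "workflow_id": "w1", "workflow_step": "start"}
events = [parse_event(base), parse_event({**base, "workflow_step": "end"}),
          parse_event({**base, "workflow_id": "w2"})]
print(completed_workflows(events))  # {'w1'}
```

Failing loudly on a renamed field turns a silent invoice shift into a visible ingestion error.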

Include Guardrails

- Exclude retries and timeouts, and discount cache hits; you don’t want to charge customers for background and housekeeping tasks. The same goes for incomplete workflows, failed validations, and so on.

- Add soft thresholds with alerts, and hard stops to prevent runaway costs from misconfigured agents; for example, using Moesif’s real-time API monitoring and alerting.

- Watch for spikes, deep sequences, or sudden jumps in cache miss rates at the tenant level; Moesif can help you set up real-time and calendar-based dynamic alerts for these anomalies.

- Alongside your gateway-specific governance features, make use of Moesif’s customer cohorts, quotas, and governance rules to ensure structured, safe, and transparent usage.
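Independent of any particular tool, the core of a soft/hard threshold check and a spike detector is small. The 80% soft threshold, the 3x spike factor, and the trailing-average baseline are hypothetical defaults; in production, you would typically let your monitoring and governance tooling enforce rules like these rather than hand-rolling them.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    ALERT = "alert"   # soft threshold crossed: notify, keep serving
    BLOCK = "block"   # hard stop: refuse further billable work


def check_budget(spend: float, budget: float,
                 soft_ratio: float = 0.8, hard_ratio: float = 1.0) -> Action:
    """Compare spend to the budget's soft and hard thresholds."""
    if spend >= budget * hard_ratio:
        return Action.BLOCK
    if spend >= budget * soft_ratio:
        return Action.ALERT
    return Action.ALLOW


def is_spike(today: float, history: list[float], factor: float = 3.0) -> bool:
    """Flag usage above `factor` times the trailing average (a deliberately simple heuristic)."""
    if not history:
        return False
    baseline = sum(history) / len(history)
    return today > factor * baseline


print(check_budget(spend=850.0, budget=1_000.0))           # Action.ALERT
print(is_spike(today=4_000, history=[1_000, 1_200, 800]))  # True
```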

Make Usage Visible to Customers

- Show real-time consumption and an estimated monthly cost.

- Send timely alerts about usage thresholds and plan renewals so customers aren’t caught off guard.

You can use Moesif’s developer portal and Embedded Templates to give customers self-service access to this information.
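An estimated monthly cost can come from a simple run-rate projection. This sketch assumes usage continues at the month-to-date daily average, which is naive for bursty agent workloads, so any customer-facing view should label the figure clearly as an estimate.

```python
import calendar
from datetime import date


def projected_month_cost(spend_to_date: float, today: date) -> float:
    """Extrapolate month-to-date spend linearly to the end of the month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    days_elapsed = today.day  # treats today as a full day of usage
    return spend_to_date / days_elapsed * days_in_month


print(projected_month_cost(120.0, date(2024, 6, 10)))  # 360.0
```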

How to Pilot, Validate, and Iterate on Pricing

Agentic AI workloads are unpredictable, and customer expectations can shift quickly as a result. That makes it important to validate your usage-based pricing before rolling it out to production.

Make Use of Historical Data

Moesif’s Billing Report Metrics provide insight into historical trends that can help you evaluate your agent pricing strategy. You can visualize usage across your existing billing meters and slice and dice that data by different criteria to answer questions like:

- Does the model sustain margins without overcharging?

- Do similar customers pay similar amounts?

- Do bills spike unpredictably for certain cohorts?

You can also export the data for external analysis, auditing, and scenario testing.
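Exported usage data can feed a simple scenario test. The sketch below replays historical per-customer usage under a candidate price and reports gross margin and bill dispersion per cohort, which addresses the first two questions above. The unit price, unit cost, and cohort labels are hypothetical inputs, and the coefficient of variation is just one possible fairness signal.

```python
from statistics import mean, pstdev


def backtest(usage_by_customer: dict[str, float], cohort_of: dict[str, str],
             price_per_unit: float, cost_per_unit: float) -> dict[str, dict[str, float]]:
    """Replay historical usage under a candidate price; report margin and bill spread per cohort."""
    cohorts: dict[str, list[float]] = {}
    for customer, units in usage_by_customer.items():
        cohorts.setdefault(cohort_of[customer], []).append(units)

    report = {}
    for cohort, units in cohorts.items():
        bills = [u * price_per_unit for u in units]
        revenue = sum(bills)
        cost = sum(units) * cost_per_unit
        avg = mean(bills)
        report[cohort] = {
            "gross_margin": (revenue - cost) / revenue if revenue else 0.0,
            # Coefficient of variation: high values mean similar customers pay very different amounts.
            "bill_cv": pstdev(bills) / avg if avg else 0.0,
        }
    return report


usage = {"a": 1_000, "b": 1_000, "c": 200, "d": 1_800}
cohort = {"a": "smb", "b": "smb", "c": "mid-market", "d": "mid-market"}
print(backtest(usage, cohort, price_per_unit=0.5, cost_per_unit=0.2))
```

Both hypothetical cohorts clear a 60% margin, but bills in the mid-market cohort are far more spread out; whether that reflects fair usage-based variation or a metric worth rethinking is a judgment call for the pilot.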

Pilot with Real Customers

Run a pilot program: select a small cohort of customers, set a time frame, and define your success criteria:

- Business metrics: revenue health, churn risk, customer adoption

- Customer experience: transparency into how charges are calculated

Pilots help you validate that the metric you’ve chosen is both fair to customers and sustainable for the business.

Moesif provides a robust set of customer- and product-centric analytics. By combining dashboards, workspaces, and Apps, you can isolate and organize analytics for pilot programs like these.

Iterate with Confidence

After the pilot, compare churn, gross margin, and cohort health. You may need to redefine the billable metric or set new thresholds; make those changes incrementally, with clear versioning, and communicate them to your customers.

Moesif’s Billing Report Metrics can support your iteration and ongoing monitoring by aggregating financial flows across your active meters.

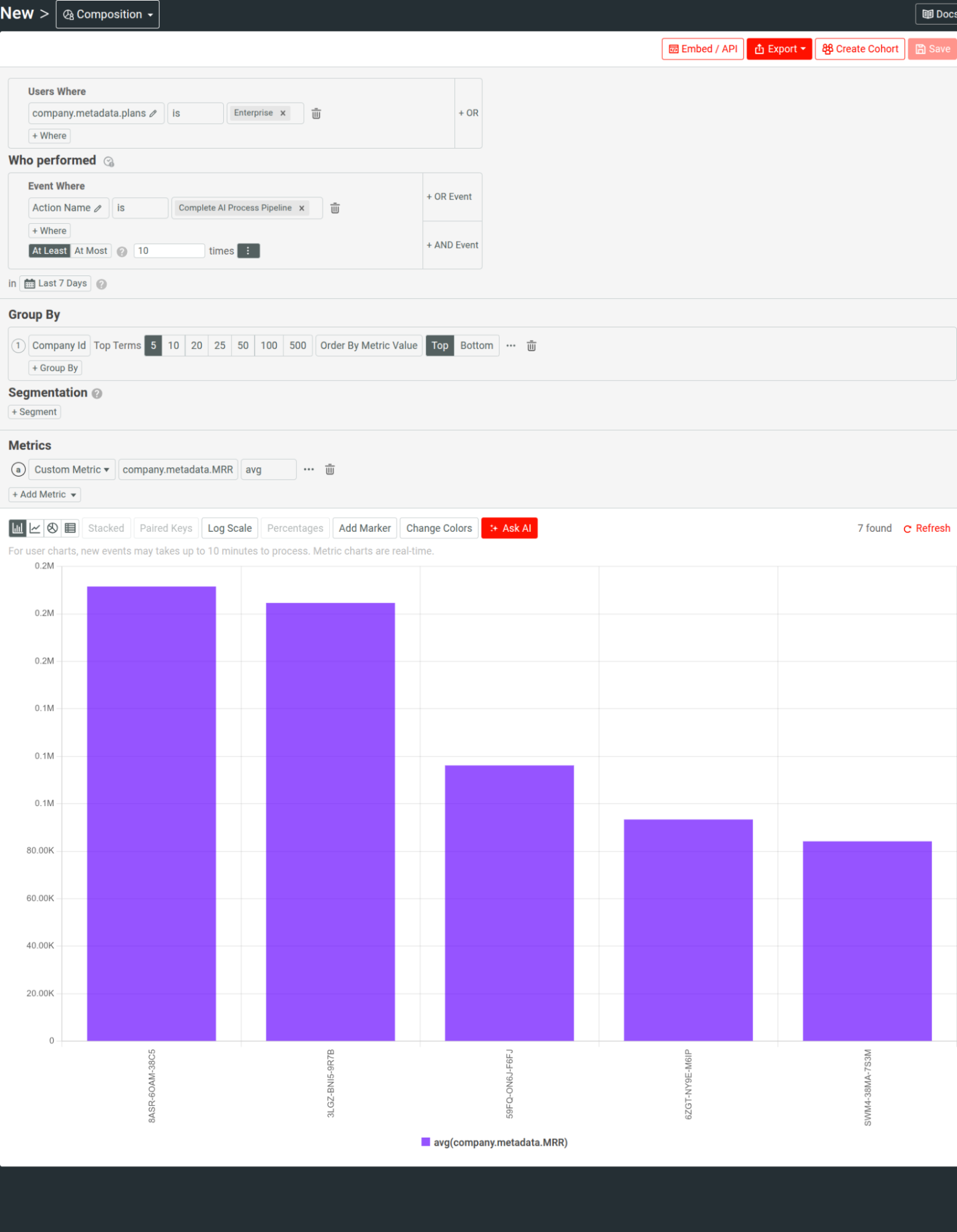

For example, here, a Composition analysis looks at the MRR from Enterprise customers that have successfully completed a workflow at least 10 times in the past 7 days:

Build a Continuous Review Loop

Your pricing should evolve as AI workloads and model costs change. Schedule regular reviews to re-run scenario tests, analyze profit margins, and check customer sentiment.

Conclusion

Much of the potential of AI agents comes from how they represent a convergence of software, services, and automation, and any monetization strategy built around them needs to account for that. Done right, usage-based pricing can provide a reliable foundation for such a strategy: flexible enough for the unpredictability of agentic workloads, yet transparent and intuitive to customers about consumption and its cost.